Abstract

While recent diffusion-based generative image codecs have shown impressive performance, their iterative sampling process introduces undesirable latency. In this work, we revisit the design of a diffusion-based codec and argue that multi-step sampling is not necessary for generative compression. Based on this insight, we propose OneDC, a One-step Diffusion-based generative image Codec that integrates a latent compression module with a one-step diffusion generator. Recognizing the critical role of semantic guidance in one-step diffusion, we propose using the hyperprior as a semantic signal, overcoming the limitations of text prompts in representing complex visual content. To further enhance the semantic capability of the hyperprior, we introduce a semantic distillation mechanism that transfers knowledge from a pretrained generative tokenizer to the hyperprior codec. Additionally, we adopt a hybrid pixel- and latent-domain optimization to jointly enhance both reconstruction fidelity and perceptual realism. Extensive experiments demonstrate that OneDC achieves state-of-the-art perceptual quality even with one-step generation, offering over 40% bitrate reduction and 20x faster decoding compared to prior multi-step diffusion-based codecs.

🔍 Overview

Multi-step sampling is not essential for image compression; one step is enough.

OneDC can compress images to text-level size (for example, a 768x768 image to 0.24 KB), while the reconstruction still retains strong semantic consistency and the original spatial details.
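To put the 0.24 KB figure in the units usually reported for codecs, the short sketch below converts it to bits per pixel (bpp). The helper name `bits_per_pixel` is illustrative and not part of OneDC. The page does not say whether "KB" means 1000 or 1024 bytes, so both readings are computed, and the comparison against uncompressed 8-bit RGB is an added assumption for scale only.

```python
def bits_per_pixel(size_bytes: float, width: int, height: int) -> float:
    """Average number of coded bits spent per image pixel."""
    return size_bytes * 8 / (width * height)


WIDTH, HEIGHT = 768, 768   # resolution quoted in the overview
SIZE_KB = 0.24             # compressed size quoted in the overview
RAW_RGB_BPP = 24           # assumption: uncompressed 8-bit RGB baseline

# "KB" is ambiguous (1000 vs 1024 bytes), so evaluate both readings.
for bytes_per_kb in (1000, 1024):
    bpp = bits_per_pixel(SIZE_KB * bytes_per_kb, WIDTH, HEIGHT)
    ratio = RAW_RGB_BPP / bpp
    print(f"KB = {bytes_per_kb} bytes: {bpp:.5f} bpp, about {ratio:.0f}:1 vs raw RGB")
```

Under either reading the rate comes out at roughly 0.003 bpp, which is the sense in which the compressed image is "text-level" in size.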

(a) Text prompts (from GPT-4o) struggle to capture complex visual semantics, and existing text-to-image models have limited generation fidelity. (b) Hyperprior guidance yields more faithful reconstructions. (c) Semantic distillation further improves object-level accuracy.

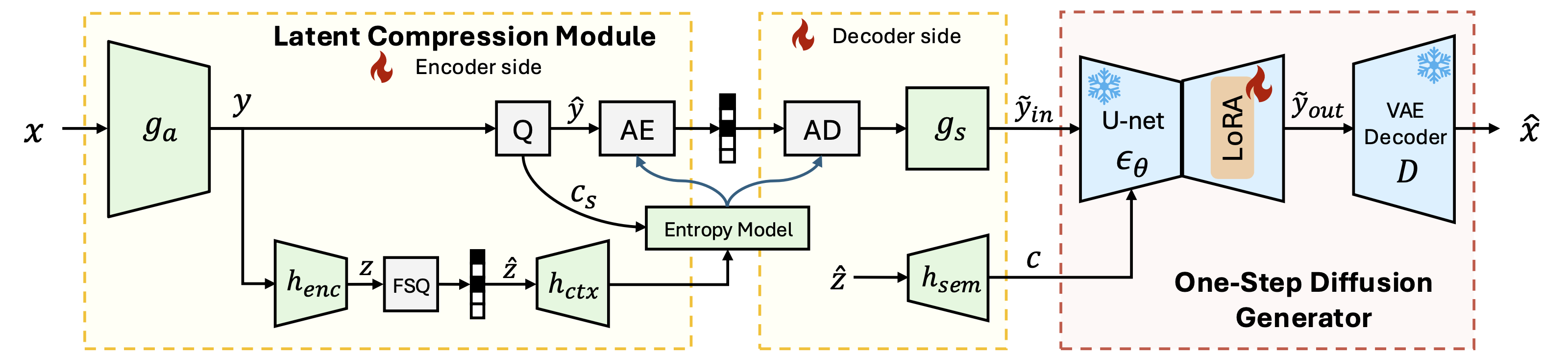

Framework overview of OneDC.

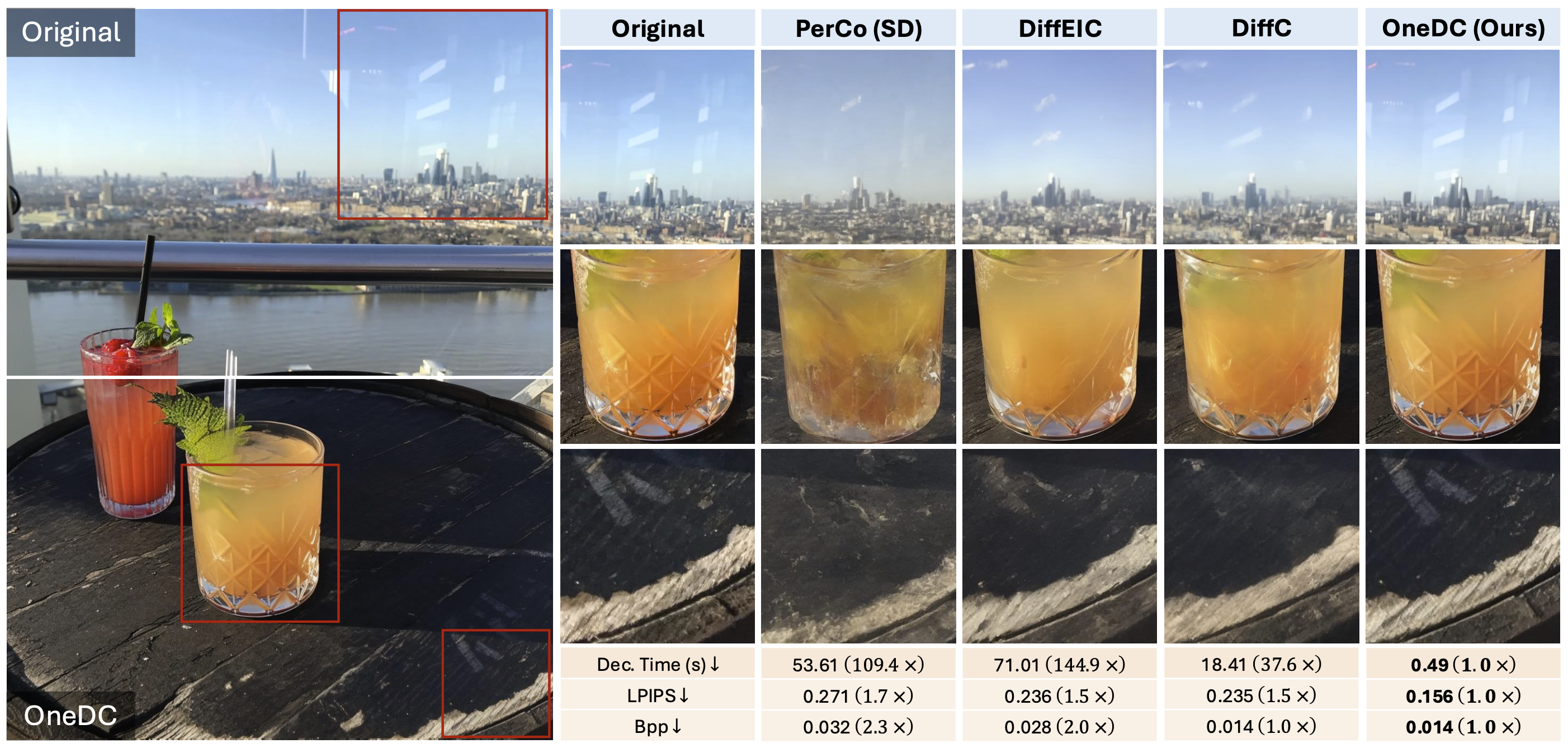

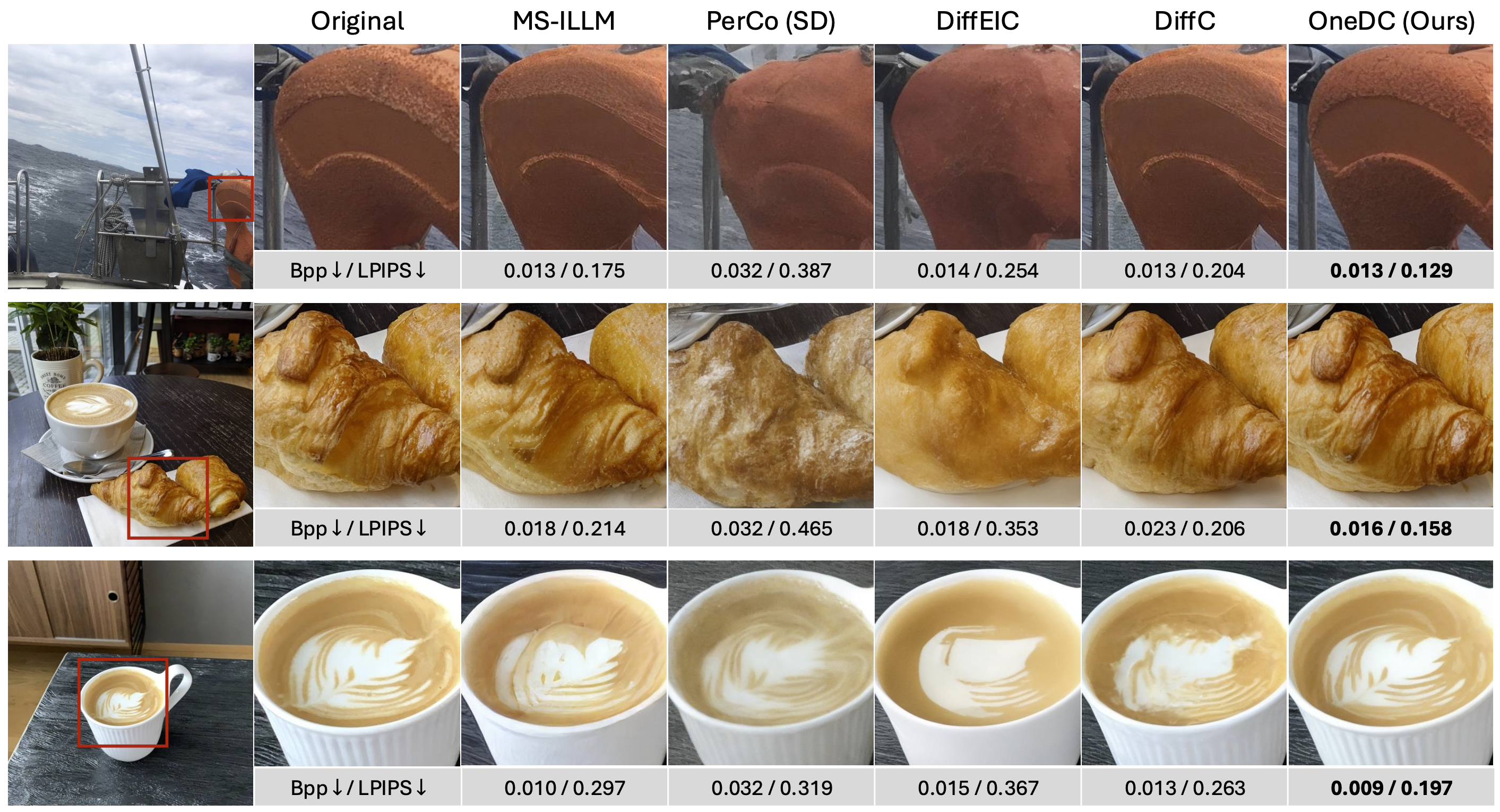

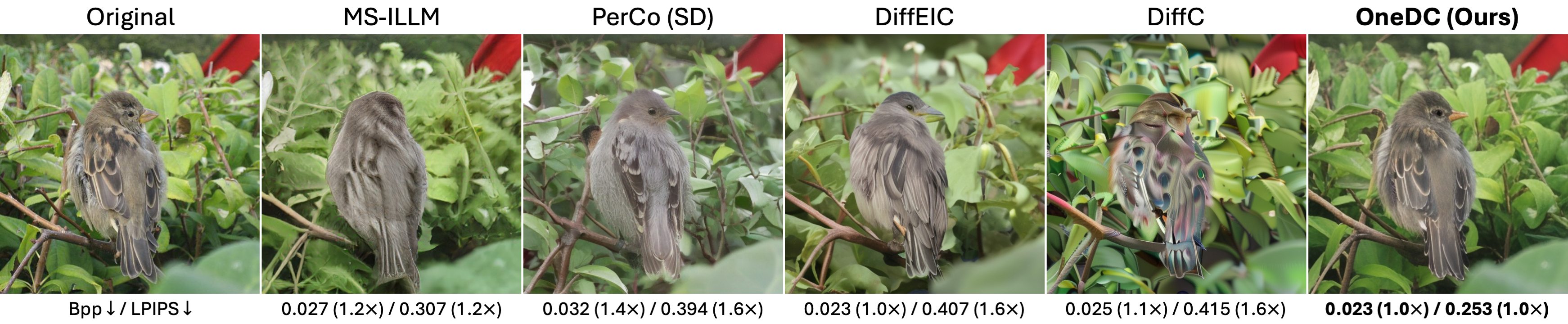

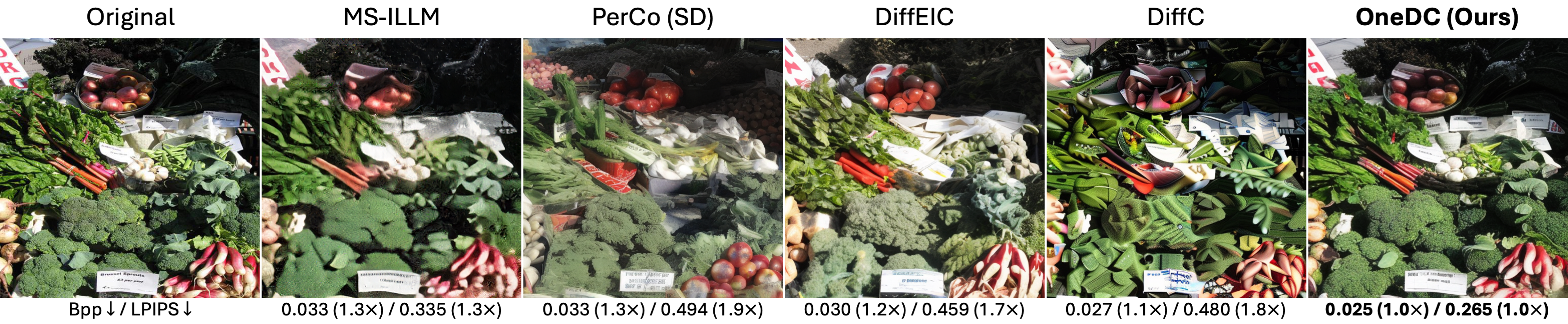

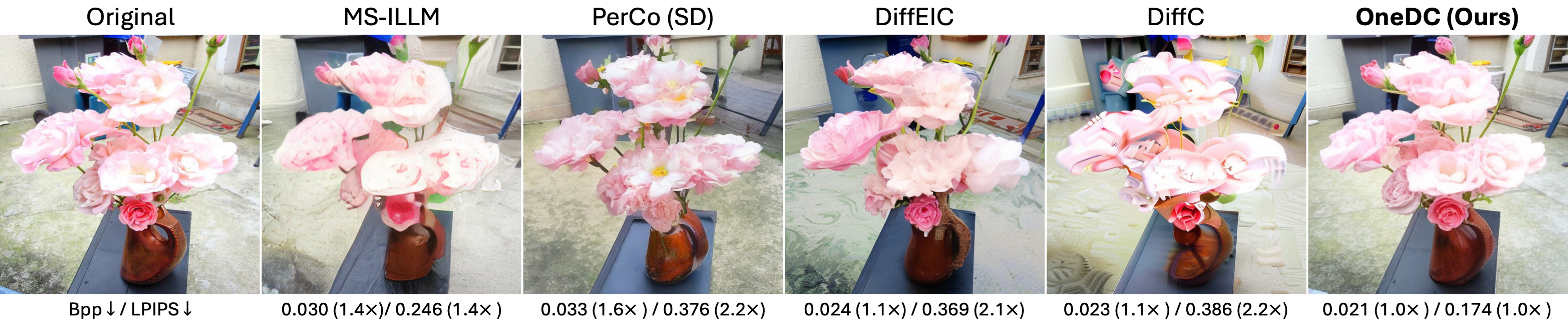

🏞️ Visual Examples

Poster

BibTeX

@inproceedings{xue2025one,
  title={One-Step Diffusion-Based Image Compression with Semantic Distillation},
  author={Naifu Xue and Zhaoyang Jia and Jiahao Li and Bin Li and Yuan Zhang and Yan Lu},
  booktitle={The Thirty-ninth Annual Conference on Neural Information Processing Systems},
  year={2025},
}